Governing General Purpose AI — A Comprehensive Map of Unreliability, Misuse and Systemic Risks

Policy Brief

Executive Summary


Recent months have been marked by a heated debate about the risks and benefits of increasingly advanced general purpose AI models and the generative AI applications built on them. Although many stress the huge economic potential of these models, concerns about their risks have escalated following a variety of incidents, ranging from an AI-generated livestream filled with transphobia and hate speech to an experiment with a general purpose AI-based agent that was given the aim to "destroy humanity", as well as fears about disruptions to our education system. While only a few well-resourced actors worldwide have released general purpose AI models, hundreds of millions of end-users already use these models, and their reach is further scaled by potentially thousands of applications building on them across a variety of sectors, ranging from education and healthcare to media and finance.

The diversity of potential risks of these models, combined with their rapid advancement and integration into everyday life, has provoked policy interventions around the world – including in the EU, US and UK, in transatlantic dialogues, and among the G7. Among these domestic and multilateral activities, the European Union has so far gone the furthest. There is already a strong political will to establish the most effective rules on general purpose AI providers in the EU AI Act, one of the first legal frameworks on AI.

While a strong EU AI Act is essential to comprehensively address the many risks stemming from general purpose AI models, the sheer diversity, scale and unpredictability of hazards demand additional policy actions. The pioneering legislation of the EU AI Act represents an essential cornerstone in comprehensively governing general purpose AI models, as it puts direct rules for these models in place. Through the Brussels effect, these rules may spread to other jurisdictions. Given the diversity of risks, however, additional policy action is needed. This could include, for example, education programmes for decision-makers and the general public, redistributive policies, industrial policy for trustworthy AI, funding for AI ethics and safety research, and international agreements that consider the global impact of this technology.

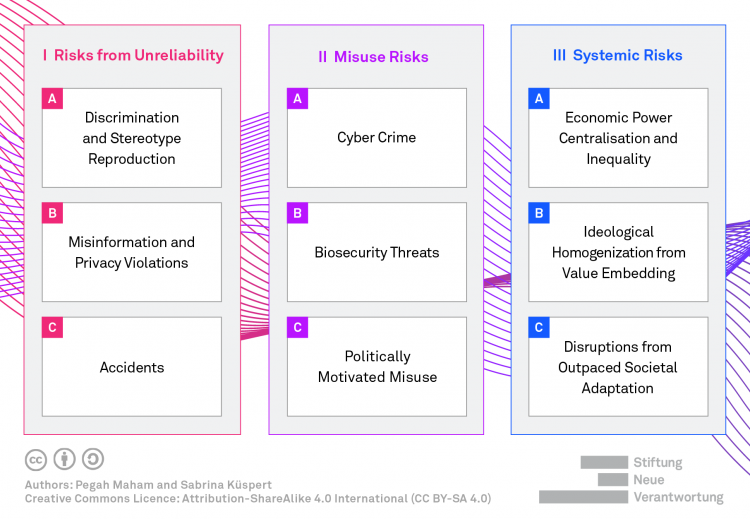

Policymakers need a comprehensive understanding of the whole range of risks associated with general purpose AI models in order to proactively mitigate these hazards. This report maps these potential risks across three categories: Risks from Unreliability, Misuse, and Systemic Risks. Using currently observable examples and relevant scenarios, we outline nine risks across these three categories. This risk map provides a structured resource for policymakers seeking to understand the multifaceted challenges of general purpose AI and their potentially far-reaching impact, so that they can govern these models effectively. It is critical for policymakers to identify risks stemming from this rapidly advancing technology, weigh their implications, and prioritise and address them adequately, ensuring that all risks are covered.

Firstly, Risks from Unreliability arise because there is currently no solution to ensure that general purpose AI models behave as intended, or to fully predict and control their behaviour. This gives rise to risks of Discrimination and Stereotype Reproduction, Misinformation and Privacy Violations, and Accidents. General purpose AI models make decisions based on complex internal mechanisms that are not yet understandable, even to their developers. This results in a lack of reliability, transparency, controllability, and other key features of trustworthiness. In the case of agentic models, it also makes it challenging to ensure that the models pursue goals that align with human objectives and values. As a result, firstly, models risk discriminating and reproducing stereotypes by exhibiting or amplifying biases present in their training data. Secondly, models can disseminate false or misleading information, omit critical information, or produce true information that violates privacy. Lastly, these models pose risks of accidents from unexpected failures during development or deployment, which could scale with advancing capabilities and agency as well as wider integration of models, leading to concerns over catastrophic or even existential risks.

Secondly, general purpose AI models are inherently dual-use, meaning that they can serve both beneficial and harmful purposes, which makes them susceptible to misuse by malicious actors. Such misuse could increase the speed, scale, and sophistication of harmful activities, for example, in Cyber Crime, Biosecurity Threats, and Politically Motivated Misuse. Actors seeking to misuse these tools would not need to build their own advanced models. Instead, they could use models that lack appropriate safeguards, bypass existing safeguards, leverage available open-source models, or use models leaked or stolen from AI labs. Firstly, general purpose AI models could make cyber crimes that leverage IT systems, such as fraud, more sophisticated and convincing, and could also be used to target IT systems, for example, through phishing emails or by assisting in programming malicious software. Secondly, general purpose AI models could facilitate the production and proliferation of biological weapons by making critical knowledge more accessible and lowering the barrier to misuse. Lastly, if misused with political motivations, these models could exacerbate surveillance efforts or existing tactics for political destabilisation, such as disinformation campaigns.

Thirdly, further Systemic Risks arise from the centralisation of general purpose AI development and the rapid integration of these models into our lives. This risks Economic Power Centralisation and Inequality, Ideological Homogenization from Value Embedding, and Disruptions from Outpaced Societal Adaptation. General purpose AI models are becoming increasingly integrated into public and private infrastructure as the foundation for further applications and systems, yet they are almost exclusively developed by a few companies, dominated by Big Tech and their investees. Firstly, economic power risks becoming increasingly centralised amongst a few actors with a certain level of control over access to this technology and its economic benefits, possibly feeding into inequality within and between countries. Secondly, as developers inscribe certain values and principles into a general purpose AI model, there is a risk of centralisation of ideological power, producing models that are not fit to adapt to evolving and diverse social views or that create echo chambers. Lastly, overly rapid adoption of this technology at scale might outpace the ability of society to adapt effectively, leading to a variety of disruptions, including challenges in the labour market, the education system and public discourse, and various mental health concerns.

The risk profile of general purpose AI models is changing as capabilities advance and the scale of deployment increases. At the same time, the models have a few characteristics that pose distinct challenges in governing them. There is currently no solution to ensure that general purpose AI models robustly behave as intended. Advanced model capabilities imply that these models can be used for ever more complex tasks and operate in a wider range of contexts. They often advance the current state of the art, which is less well understood and offers more possibilities to cause harm. AI applications that are based on a general purpose AI model often inherit the risks that originate in the design and development of the underlying model. As these models are integrated into an increasing number of applications across a variety of sectors, shortcomings in one model could be scaled to thousands of downstream systems worldwide. As models are increasingly built with greater agency, they can be deployed more autonomously in more complex tasks and environments, seemingly requiring less human oversight. Even for experts in the field, the pace of progress is surprising.

The European Union has a unique opportunity to mitigate risks stemming from general purpose AI models and establish itself as a global leader in guiding the responsible and safe development and deployment of this fast-evolving technology, with a strong EU AI Act and beyond.

Pegah Maham

Sabrina Küspert