The Digital Services Act is in effect – now what?

Policy Brief

What the establishment of Digital Services Coordinators across the EU means for platform users, researchers, civil society and companies

Executive Summary

The time when online platforms could mostly make up their own rules, with little external oversight, is coming to an end in the European Union (EU). Starting on February 17, 2024, the Digital Services Act (DSA) will apply in its entirety to services such as search engines, online marketplaces, social media sites and video apps, which are used by millions of people every day. This will bring about changes not only for tech companies but also for platform users, researchers and civil society organizations.

Exploring each of these groups’ roles and interactions within the EU’s new platform oversight structure, this paper provides a resource on the changes ahead and the accompanying short- and long-term responsibilities. It highlights the opportunities and open questions related to DSA provisions such as complaint mechanisms, access to platform data for researchers, trusted flaggers and oversight structures for small and medium-sized platforms.

The benchmark for the DSA is how well it helps people understand online spaces and be safe in them. This means improved consumer protection, a stronger standing for users towards tech companies, better safeguards for minors and a clearer grasp of algorithmic systems. Whether people experience such benefits in their daily lives, or whether the DSA becomes merely an exercise in bureaucracy with few improvements for platform users, now hinges on a novel oversight structure meant to enforce the rules.

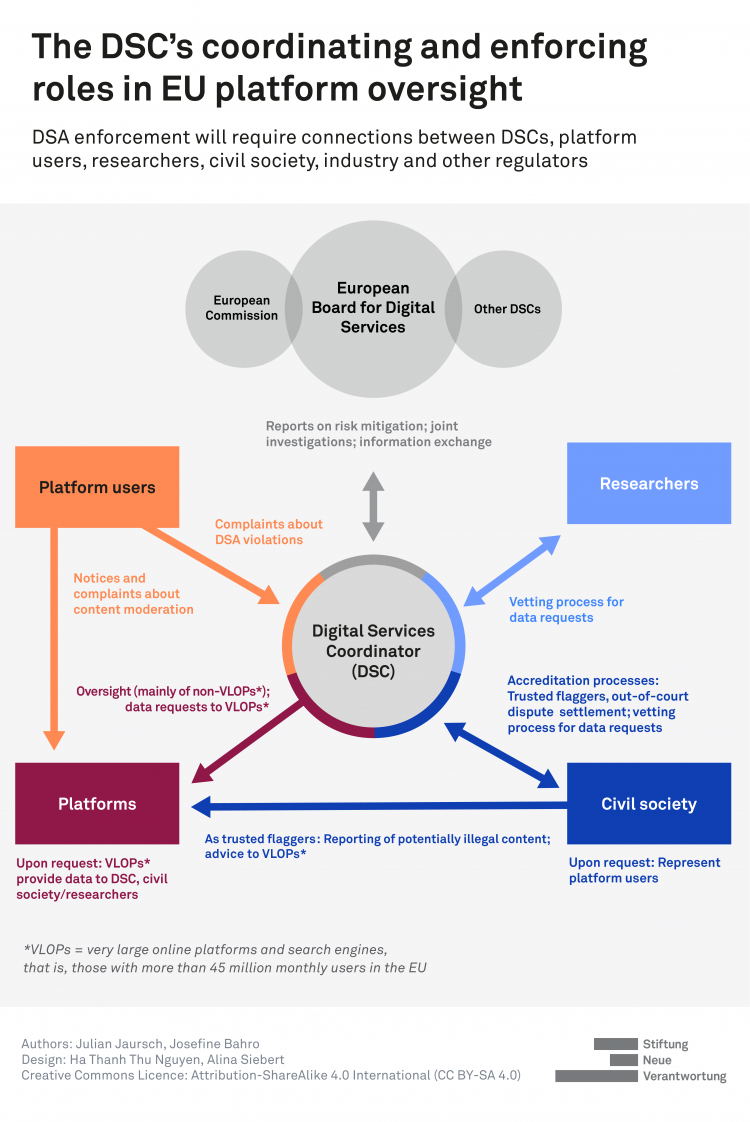

The European Commission plays a significant role in enforcing the DSA. In addition, every member state must designate a specific platform oversight agency, the Digital Services Coordinator (DSC). Crucially, beyond these regulators, a network of other organizations is supposed to help enforce the DSA. Civil society groups are explicitly mentioned in the DSA to support enforcement in numerous ways, from consulting regulators to representing consumers. Platform users have new complaint mechanisms that they can use to bring DSA violations to light. Researchers now have a legally guaranteed pathway to request data from platforms to study potential risks. Platforms are encouraged to work together on industry codes of conduct.

Making the DSA work requires the development of a community of practice that encompasses not only regulators but also platform users, researchers, civil society and companies (see figure below). One way to advance the creation of a community of practice is to build a permanent advisory body at the European Board for Digital Services. The Board brings together all DSCs and the Commission and may consult with outside experts. Having a pluralistic, specialized advisory body there could be particularly helpful in finding suitable risk mitigation measures, which is a task already assigned to the Board. Funding should be made available for this and generally for researchers and advocates to fulfill their roles in the consistent enforcement of the DSA throughout the EU. At the national level, DSCs should also embrace exchanges with non-regulatory organizations to advance their understanding of different types of platforms and associated risks.

DSCs, individually and via the Board, can thus serve as connectors between different groups. However, several obstacles stand in their way. One potential obstacle to user-friendly and public interest-oriented DSA enforcement is abuse of the rules by either governments or platforms to suppress voices. This risk must be addressed by, for example, establishing parliamentary oversight for regulators, whistleblower protections and transparency reporting. Yet, in addition to preventing the DSA from enabling censorship, it is at least equally important to prevent it from becoming a dud: a law that merely creates piles of data that regulators and researchers are too overwhelmed to use because of cumbersome and performative bureaucracy and a lack of resources. DSCs, while not solely responsible, are in a good position to counter such tendencies if they are well-staffed but remain lean, are independent yet accountable to the public and embrace their role as a node in an emerging community engaged in DSA enforcement.

Moreover, in the long run, DSCs should be in a good position to contribute to a meaningful and thorough evaluation of the DSA. By the time of the first full evaluation in 2027, they will not only have gained first-hand enforcement experience but will also be able to collect feedback and ideas from their network. The evaluation must include a review of the DSA’s governance structure, including the Commission’s role, and of potential gaps such as coverage of new platforms or ad tech businesses.

Dr. Julian Jaursch