As described at the beginning, we face two challenges in auditing and assessing recommender systems and their risks. First, social media platforms and their recommender systems are composed of multiple products that are embedded in a specific choice architecture and shaped by platform-specific affordances. Second, systemic risks, as mentioned in the DSA, are often described vaguely and abstractly.

The DSA now requires various procedures to audit algorithmic systems and assess how they contribute to systemic risks. In our paper, we have identified different audits and assessments as demanded by the DSA (see Section 4.1):

-

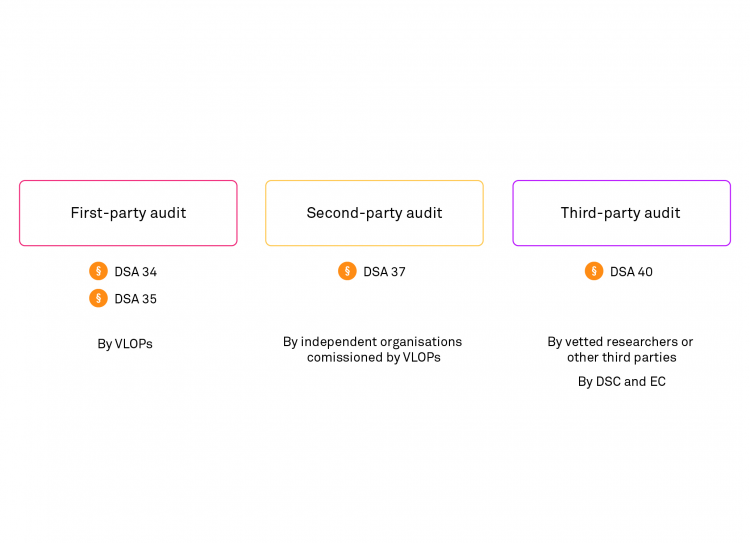

First-party ‘risk assessments’ are conducted by VLOPs internally (i.e., Article 34, DSA) and published once a year.

-

‘DSA compliance audits’ are a type of second-party audit conducted by external contractors. It is unclear whether these include any technical aspects.

-

Third-party ‘risk assessments’ or ‘impact assessments’ are carried out by external and independent researchers (i.e., Article 40, DSA).

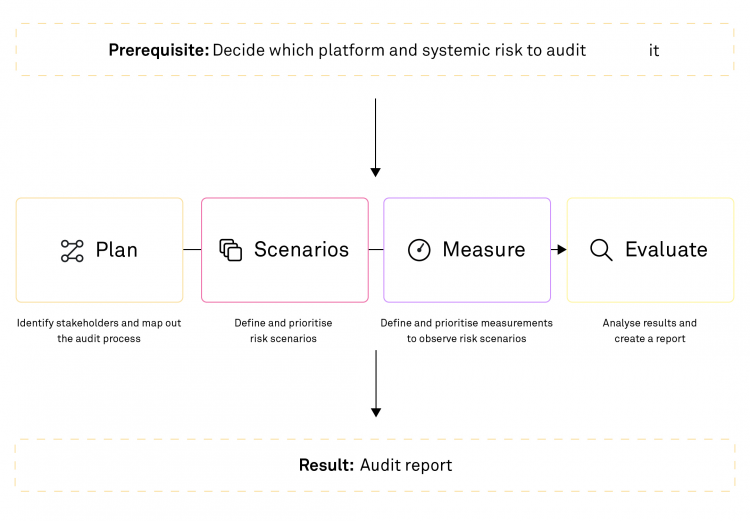

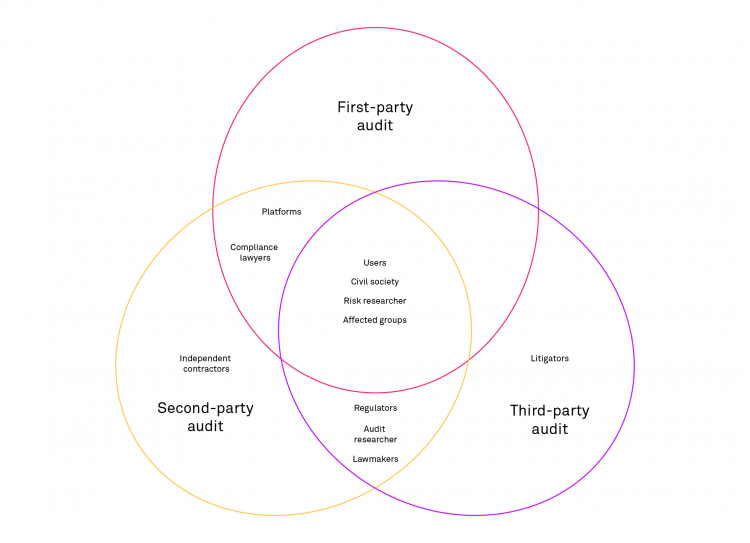

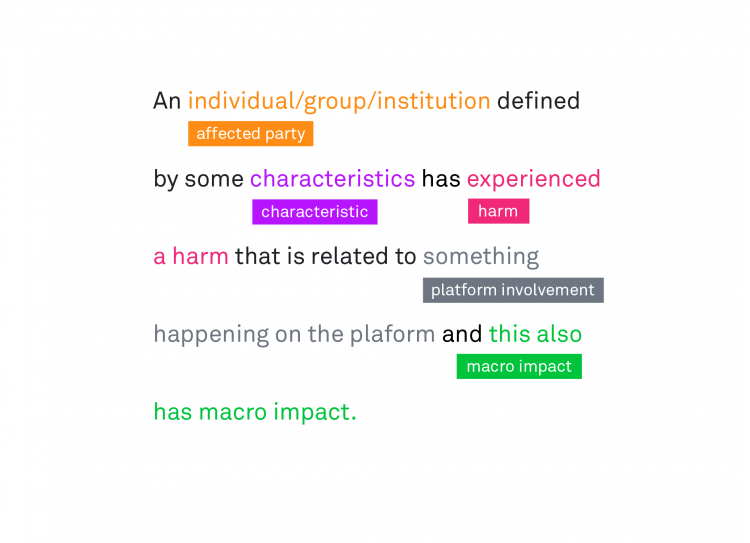

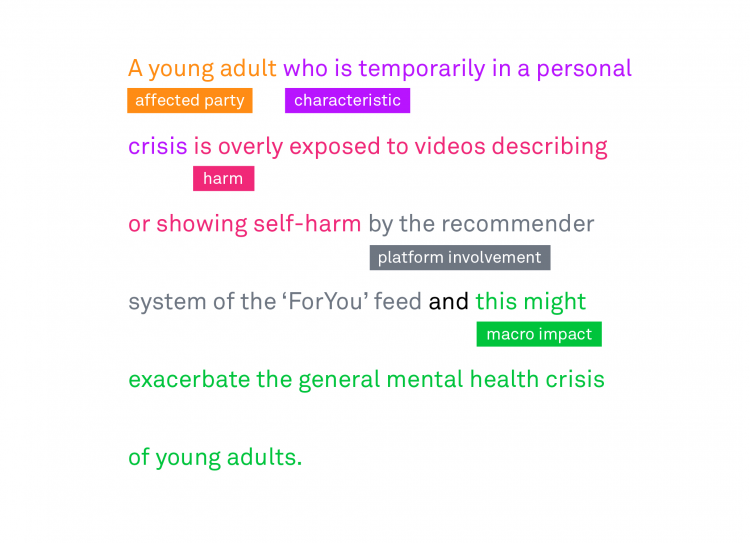

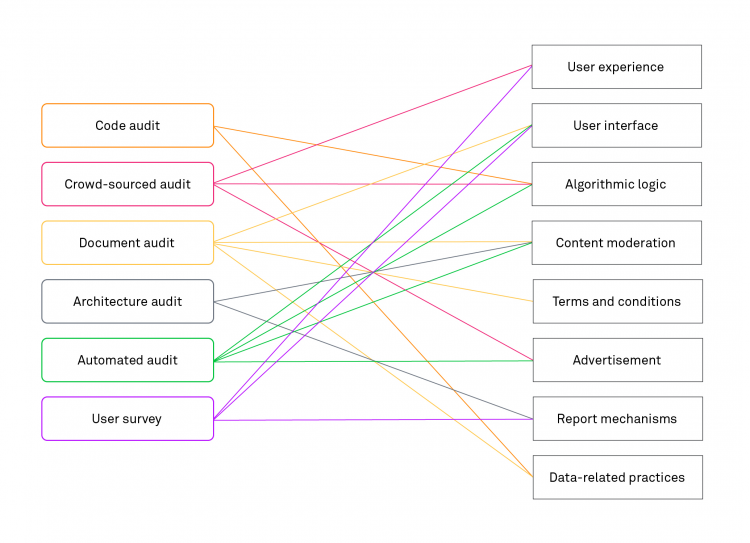

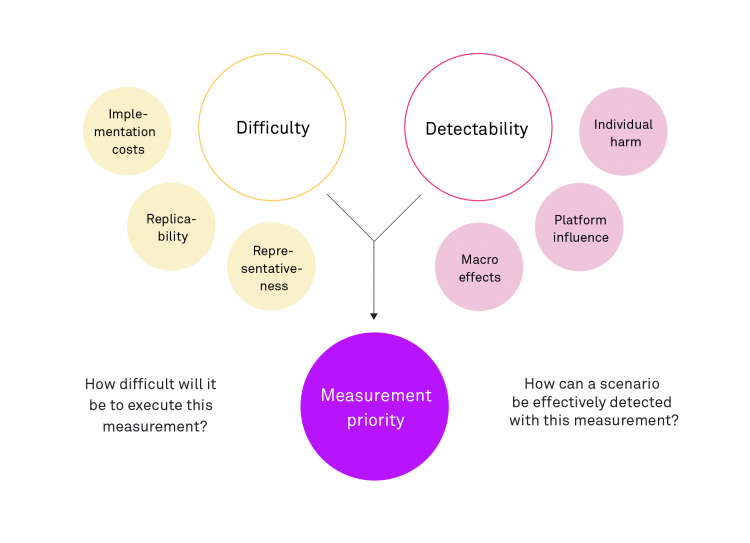

These required audits and risk assessments are important, but they are only snapshots, because the platforms and the way users engage with content continuously evolve. Furthermore, to investigate systemic risks, you must bear in mind that they arise from an interplay between individual and societal phenomena, with platform-specific risks induced by each platform's affordances and recommender systems. This tension and the intrinsic complexity of social and psychological phenomena require that various relevant parties participate in the audit. For this reason, we propose a multistakeholder audit process that breaks down the abstract systemic risks into concrete scenarios and then conducts multiple types of audits to ensure that the effects within and outside the platform are studied (e.g., by combining user surveys or expert interviews with an automated audit).

The present paper provides guidelines to operationalise systemic risks, set up a process to make well-founded decisions on suitable audit methods and enable comparability between different approaches. The risk-scenario-based audit process meets requirements for first-, second- and third-party audits. It helps auditors to set up an audit process that brings together various elements, issues, stakeholders and audit types. The audit process consists of four steps, described in Sections 5-9, where we also explain how the different components relate to DSA requirements. Our guidelines can also help you revisit scenarios after a change to the platform has been implemented, allowing you to assess whether the risk mitigations deployed have had the desired effect.

We explicitly suggest that the risk-scenario-based audit process we have put forward in this paper be considered a contribution to the ongoing discussions on how the DSA should be implemented. At the time of writing this, several pieces of secondary legislation were being developed. Among them were an implementing act on a transparency reports template, a delegated act on independent external audits and guidelines on specific risks related to risk mitigation.[86]

Below are some recommendations we offer for the enforcement of the DSA. We detected the issues these recommendations seek to address while developing the risk-scenario-based approach for audits of social media platforms and during our exchanges with experts and other stakeholders.

Prevent ‘audit-washing’ through compliance audits

The DSA requires independent contractors to audit whether VLOPs violate their due diligence obligations. Therefore, the European Commission is currently working on the delegated act on independent audits according to Article 37, the audits we call compliance audits. An internal presentation by the European Commission suggests that these independent compliance audits should be part of a life cycle of risk management supervision by the European Commission. Another part of this life cycle is risk assessments, which, in turn, are also an object of scrutiny for compliance audits. However, it is still unclear who these independent auditors are going to be and whether compliance audits must also assess the quality of the VLOPs’ internal risk assessments.

Therefore, we recommend the following three measures:

- Make sure that independent compliance audits of external contractors also evaluate the quality and methods of the VLOPs’ internal risk assessments.
- Ensure that these independent second-party audits include independent researchers and experts on systemic risks and algorithm audits as stakeholders in their auditing processes.
- Ideally, second-party audits include independent risk assessments themselves using the risk scenario audit process that we have described as a facilitating tool.

These requirements are essential to ensure that second-party audits are not rendered mere ‘audit-washing’ tools for the platforms, as the audit researchers Ellen Goodman and Julia Trehu suggested.[87]

Extended transparency and observability of risk assessments are necessary

The topic of data access and transparency is worthy of a paper of its own, which is why we cannot go in-depth on this issue here. However, we briefly want to discuss several points with strong connections to risk assessments and audits in the following sections.

As we outlined in Section 4.1, VLOPs are obliged under Article 42(4)(a) to report the results of their internal risk assessments to the digital services coordinator of establishment and the European Commission in accordance with Article 34 and to make them publicly available.

For transparency and to enable their scrutiny by any interested party, VLOPs should also be required to provide a thorough methodological description of the assessments they have carried out, including their hypotheses, information about which metrics were considered and if and how they assessed their own internal experiments (such as A/B-testing). Making this available to the public would enable reviews of these first-party audits by third-party auditors, which can only result in heightened accountability. This is already vaguely stated in Article 42. However, we advocate for these audit processes, especially the tested scenarios, to be disclosed.

Observability should be the goal of transparency and data access obligations

As described above, platforms and how users engage with them continuously evolve. Therefore, the information required to conduct audits and, thus, understand the impact that regulations and their enforcement have had on risk mitigation measures is also constantly changing. If transparency and data access obligations do not reflect the dynamic nature of the issue and instead focus on static metrics, platforms might try to optimise these numbers instead of actually mitigating risks.

Therefore, the goal of transparency and data access obligations should be to maximise observability, that is, our capacity to scrutinise these platforms and their processes over time and thus to detect their evolution and their impacts on individuals, groups and society, also across different platforms.

This is why data access needs to include real-time data. Although this is already mentioned in the DSA (e.g., Recital 98), platforms can backtrack if providing access is technically complicated. Instead, platforms should be required to give access to and provide the technical means for auditors to analyse the platforms in real time. This must be further specified in the delegated and implementing acts because it is an important step towards achieving platform observability.

This also shows that transparency reports need standards. Experiences with the transparency reports under the Network Enforcement Act in Germany have shown that these reports are difficult to compare and assess, for example, because they do not disclose the societal scale[88] of harmful exposures or explain why such exposures happen. Also, these reports sometimes work with different metrics, even within one report, or do not disclose the base population behind the prevalence numbers they provide. We recommend more precise specifications for DSA transparency reports (e.g., in accordance with the European Digital Media Observatory (EDMO)[89] and AlgorithmWatch[90]). In line with this, we strongly support the recommendation by the Integrity Institute, an American nonprofit organisation working to improve social platforms, calling for these reports to disclose the entire life cycle of harmful content.[91]

Maintain critical supervision

The European Commission and the Digital Services Coordinators are responsible for the supervision of transparency and audit reports. We worry that any oversight approach based on key performance indicators or similar metrics (e.g., focusing on the number of deleted posts or comparable high-level data in dashboards) might distract from what should be the focus of interest: actual risks and specific scenarios. Therefore, we call for quality-based approaches to supervision, which not only check whether risk assessments were conducted but also evaluate their quality.[92]

As a civil society organisation, we want to contribute to setting high standards for the societal scrutiny of the recommender systems of social networks and similar online platforms. Audits that are mainly conducted internally as first-party audits and only reviewed for compliance by second-party auditors will fail to effectively minimise the systemic risks that these technologies pose. Therefore, we see our approach as a first attempt at standardising the audit processes already present in the DSA, one that seeks to achieve comparability. We believe that the approach outlined in this document can contribute to furthering the operationalisation of the audits and risk assessments that the DSA requires and those carried out beyond its scope. Moreover, we hope it can also become part of the toolbox that every concerned organisation or authority has at hand to watch over the pressing challenges of our constantly evolving digital public sphere.